What Does It Mean to Have Predicted an Economic Event?

Consider the never-ending argument about whether certain economists did or did not predict the financial crisis of 2007-08. It raises all kinds of methodological questions in economics, questions that reach back much further than Milton Friedman’s famous 1953 essay “The Methodology of Positive Economics,” which cast economics as a predictive science.

Here’s the fundamental conundrum: what does it mean to have predicted something in economics?

Intuitively, it ought to be something like: if you claim something about the future state of the world and that empirically turns out to be correct, you predicted it.

There are myriad problems with this intuition:

- If I rolled a six a moment before the power went out, did my die predict the power outage?

- If I rolled a six two years before the power went out, did my die predict the power outage?

- If I rolled a six and the power somewhere, at some time, went out, did my die predict that power outage?

- If the power went out repeatedly the last few nights and I guessed it would go out that night again while rolling a six, did I predict that night’s power outage?

It is easy enough to spot the errors in these scenarios: there is no plausible relation between power outages and the rolling of dice; a roll made years in advance says nothing about when the outage will happen; counting any outcome anywhere as part of my silly prediction is not evidence of success; and correctly extrapolating the recent past, then tying it to a six, does not vindicate the power of my rolling arm.

There are a number of criteria a successful prediction must meet:

- It must be precise, not general. Predicting rain in London or earthquakes in California in general (or over some lengthy time period) is useless. By historical averages and common sense, we are fairly confident they will happen again.

- It cannot be a mere extrapolation of the recent past. The prediction that yesterday’s weather will persist today actually performs fairly well when tested, but that is because weather systems are frequently stable, not because any forecaster has particular skill.

- It must be underpinned by a plausible theory that explains and accounts for the empirical outcomes we observe, or at least point to a strong statistical association between the relevant variables.

- It must not be an “accidental generalization,” in which observations happen to agree with the forecast without any law-like relationship behind them; in other words, it must not be what normal people would call a coincidence.

- It must be communicated in advance.

- It must be replicable and continue to predict future instances.
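The extrapolation criterion has a standard quantitative form in forecast verification: score a forecast against a naive persistence benchmark (“today will look like yesterday”) and credit it only for the improvement. Below is a minimal Python sketch, assuming a mean-squared-error skill score, 1 − MSE(forecast) / MSE(persistence); the temperature series and both forecasters are hypothetical numbers made up for illustration, not real data.

```python
def mse(predicted, actual):
    """Mean squared error between two equal-length sequences."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


def persistence_skill(forecasts, observed):
    """Skill score relative to a persistence forecast.

    forecasts[i] is the forecast for observed[i + 1], made at time i.
    The persistence benchmark simply predicts observed[i] for observed[i + 1].
    Returns 1 - MSE_forecast / MSE_persistence: positive means the forecaster
    beat naive extrapolation; zero or negative means no added skill.
    """
    targets = observed[1:]
    persistence = observed[:-1]
    if len(forecasts) != len(targets):
        raise ValueError("need one forecast per step after the first observation")
    return 1 - mse(forecasts, targets) / mse(persistence, targets)


# Hypothetical daily temperatures and two hypothetical forecasters.
observed = [12.0, 13.0, 13.5, 13.0, 15.0, 16.0, 15.5]
just_copies_yesterday = observed[:-1]
adds_some_information = [12.8, 13.6, 13.2, 14.6, 15.8, 15.7]

print(persistence_skill(just_copies_yesterday, observed))  # 0.0
print(persistence_skill(adds_some_information, observed))  # close to 0.95
```

A score of zero means the forecaster added nothing beyond copying yesterday; only a positive score, sustained over many out-of-sample periods, would also satisfy the replication criterion.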

You may add to or tweak these criteria somewhat, but their gist points to a serious problem for all of economics: how can we ever predict anything? Nate Silver writes in his superb *The Signal and the Noise*:

What happens in systems with noisy data and underdeveloped theory — like earthquake prediction and parts of economics and political science — is a two-step process. First, people start to mistake the noise for a signal. Second, this noise pollutes journals, blogs, and news accounts with false alarms, undermining good science and setting back our ability to understand how the system really works. (p. 162)

One vocal advocate of the “I predicted it” delusion is the Australian economist Steve Keen, who has repeatedly argued that weather forecasting is analogous to (or at least an inspiration for) forecasting in economics and finance, and that the huge improvement in weather forecasting ought to give economists similar tools for our own science. Silver again:

Weather forecasters have a much better theoretical understanding of the earth’s atmosphere than seismologists do of the earth’s crust. They know, more or less, how weather works, right down to the molecular level. Seismologists don’t have that advantage. (p. 162)

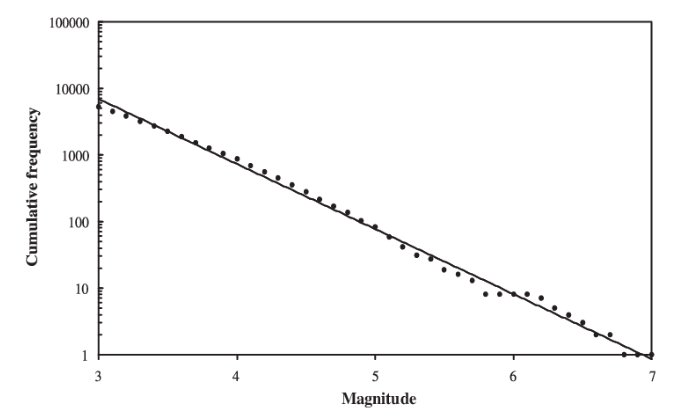

Neither do economists. Moreover, economics has nothing like the Gutenberg-Richter law, which states that the logarithm of the number of earthquakes at or above a given magnitude falls linearly as magnitude rises. (See the figure above.)
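In its usual form the law reads log10 N = a − bM, where N is the number of earthquakes of magnitude M or greater, a measures a region’s overall level of activity, and b is typically close to 1. The Python sketch below is illustrative only: it builds a synthetic catalog (not real seismic data) and recovers b with Aki’s maximum-likelihood estimator, which assumes continuous magnitudes above a completeness threshold. The constant a = 8.0 is a hypothetical value.

```python
import math
import random


def expected_count(a, b, magnitude):
    """Gutenberg-Richter: expected number of events with magnitude >= `magnitude`,
    from log10 N = a - b * M."""
    return 10 ** (a - b * magnitude)


def aki_b_value(magnitudes, completeness):
    """Aki's maximum-likelihood estimate of b, assuming continuous magnitudes
    at or above the completeness magnitude."""
    above = [m for m in magnitudes if m >= completeness]
    mean_excess = sum(above) / len(above) - completeness
    return math.log10(math.e) / mean_excess


# Synthetic catalog: under Gutenberg-Richter, magnitudes above the completeness
# level are exponentially distributed with rate b * ln(10).
random.seed(1)
TRUE_B, M_C = 1.0, 5.0
catalog = [M_C + random.expovariate(TRUE_B * math.log(10)) for _ in range(10_000)]

print(round(aki_b_value(catalog, M_C), 2))  # close to the true b of 1.0

# With b = 1, each extra unit of magnitude makes events ten times rarer.
a = 8.0  # hypothetical activity constant
print(expected_count(a, 1.0, 5.0) / expected_count(a, 1.0, 6.0))  # 10.0
```

The useful property is that one estimated parameter turns thousands of small, routine events into a statement about rare, large ones.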

Seismologists may not yet know all of the fundamental laws governing when and where earthquakes take place, but they have a vast number of nearly identical observations and a compelling tool for structuring them: the Gutenberg-Richter law. That tool lets them draw informative conclusions about the risk profiles of different regions and about the frequency of, say, magnitude 7+ earthquakes in San Francisco over the next 30 years (about one earthquake).
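Turning an expected count like “about one earthquake in 30 years” into a probability requires a model of timing. A minimal Python sketch, assuming events arrive as a memoryless Poisson process (real fault models are often time-dependent, so this is a simplification) and taking the expected count of one from the text:

```python
import math


def prob_at_least_one(rate_per_year, years):
    """P(at least one event within `years`) for a Poisson process with the given rate."""
    return 1 - math.exp(-rate_per_year * years)


# "About one" magnitude 7+ event expected in 30 years -> a rate of 1/30 per year.
rate = 1 / 30

print(round(prob_at_least_one(rate, 30), 3))   # 0.632 over the 30-year window
print(round(prob_at_least_one(rate, 1), 3))    # 0.033 in any single year
print(round(prob_at_least_one(rate, 300), 3))  # 1.0 over three centuries
```

The last line also illustrates the first criterion above: stretch the window far enough and a vague “there will be an earthquake” becomes virtually certain, and therefore worthless as a prediction.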

At this point, an informed objection might be: couldn’t economists do the same thing for financial crises? After all, we have an avalanche of data over more than a century for a staggering number of financial crises. Let’s try.

There are at least three additional problems economists face that seismologists don’t:

1) Definitions

Seismology has a pretty clear idea of what an earthquake is and what it looks like in real life. In contrast, economists can’t agree on what qualifies as a financial crisis. To give a vivid example from a literature I’m familiar with: when Charles Calomiris and Stephen Haber count banking crises since 1800, the U.S. has had a miserable 8, while their poster child for financial stability, Canada, has had 0. Two well-regarded Harvard economists, Carmen Reinhart and Kenneth Rogoff, use a somewhat looser definition of a banking crisis; they tally 13 instances in the U.S. since 1800, and Canada’s supposedly flawless record jumps to 8 crises, worse even than the average Western European country. Clearly, definitions and cutoff points matter greatly.
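Why cutoffs matter can be shown with a toy example. The Python sketch below applies two hypothetical thresholds to the same made-up series of annual bank-failure shares (invented numbers, not taken from Calomiris and Haber or from Reinhart and Rogoff) and merges consecutive crisis years into one episode. Neither threshold is either pair of authors’ actual definition.

```python
def count_crises(failure_rates, threshold):
    """Count crisis episodes: runs of consecutive years in which the share of
    failed banks meets or exceeds `threshold`. Adjacent crisis years are
    merged into a single episode."""
    episodes = 0
    in_crisis = False
    for rate in failure_rates:
        if rate >= threshold and not in_crisis:
            episodes += 1  # a new episode starts
        in_crisis = rate >= threshold
    return episodes


# Hypothetical annual shares of banks failing (not real data).
series = [0.01, 0.06, 0.02, 0.12, 0.15, 0.01, 0.04, 0.03, 0.09, 0.02]

strict = count_crises(series, 0.10)  # only severe panics count
loose = count_crises(series, 0.03)   # milder distress also counts
print(strict, loose)  # 1 3
```

Same history, same data: one definition finds a single crisis, the other finds three.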

In other words, where seismologists have a pretty clear yardstick for and knowledge of every single earthquake on the globe larger than a magnitude 5 since the 1960s, economists’ quibbles over what constitutes a crisis radically change the discussion and put us even further behind; we can’t even agree on what the thing we’re attempting to predict looks like.

Moreover, the sheer number of observations gives seismologists much better data to work with. Every year sees more than a thousand earthquakes of magnitude 5 or higher (and millions of smaller ones), whereas financial crises are, fortunately, much less common. Indeed, the Macrohistory Database, assembled by Òscar Jordà, Moritz Schularick, and Alan Taylor, covers “systemic financial crises” in 17 developed countries over roughly the last 150 years and contains no more than 90 crises in total, not 90 per country. That’s roughly the number of earthquakes of magnitude 5 or higher in the United States alone in an average year.
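The scarcity is easy to quantify with back-of-the-envelope arithmetic. This Python sketch uses only the figures quoted above (90 crises, 17 countries, 150 years), treats every country-year as equally exposed to a crisis, and applies the rough rule that the relative sampling error of a count of n independent events is about 1/√n. The equal-exposure and independence assumptions are exactly what the next section calls into question, and the 1,000-earthquake comparison is an illustrative round number.

```python
import math

crises = 90     # total systemic crises in the database (figure from the text)
countries = 17
years = 150

country_years = countries * years
rate = crises / country_years

print(country_years)       # 2550
print(round(rate, 4))      # 0.0353 crises per country-year
print(round(1 / rate, 1))  # 28.3 years between crises in a typical country


def relative_error(n_events):
    """Approximate relative sampling error of an estimated rate based on
    n independent events (Poisson: standard deviation of a count is sqrt(n))."""
    return 1 / math.sqrt(n_events)


print(round(relative_error(90), 3))    # 0.105 for 90 crises
print(round(relative_error(1000), 3))  # 0.032 for 1,000 earthquakes
```

Even under these generous assumptions, a century and a half of crises pins down the basic crisis rate less precisely than a single year’s worth of moderate earthquakes pins down the seismic rate.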

2) Identical observations

Some of the exact laws underlying seismology may still elude those studying them, but nobody believes that their ultimate causes have changed over time. The movements of tectonic plates are the same today as they were 200 years ago.

Nobody would presume that the same holds for financial crises. Social and economic regimes shape how societies and markets work. The way a particular mid-19th-century financial crisis was shaped and created by the rules, technology, and institutions of its day is not comparable to the way today’s monetary institutions and financial markets influence and propagate crises.

What economists are trying to get at when formulating business cycle theories or predicting financial collapse may very well be whatever timeless underlying forces govern our investing behavior (e.g., animal spirits, the financial-instability hypothesis, the efficient markets hypothesis, or the latest fad, behavioral finance), but the observed outcomes differ entirely. This identification problem makes our already-tricky definitional problems that much worse.

3) Feedback and change

In contrast to the earth sciences, where the pressure building up along fault lines cares very little for the musings of seismologists, economic actors often change their behavior when new regularities are identified and publicized. In economics, this is known as Goodhart’s law, after Charles Goodhart, the banking scholar and former London School of Economics professor. It grew out of the 1970s attempt to base monetary policy on monetary aggregates, which broke down because previously identified relationships disappeared once the measure became a policy target.

This phenomenon goes by many names: feedback, endogeneity, reflexivity. On top of that, whether an episode becomes a crisis at all depends on human responses and public policy in a way that the frequency and magnitude of earthquakes do not.
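What a Goodhart breakdown looks like in the data can be sketched with a toy simulation in Python. Everything here is hypothetical: an indicator (a “monetary aggregate”) tracks the outcome (“nominal income growth”) when nobody targets it; once it is targeted, activity shifts into unmeasured substitutes by whatever amount keeps the indicator near target. The behavioral response is built in by assumption, so the sketch shows the symptom, not the cause.

```python
import random


def correlation(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


random.seed(0)
N = 2_000
TARGET = 5.0  # hypothetical target growth rate for the aggregate

# Hypothetical "true" nominal income growth in each period.
income = [random.gauss(5.0, 2.0) for _ in range(N)]

# Regime 1 (no target): the measured aggregate passively tracks income.
money_passive = [y + random.gauss(0.0, 1.0) for y in income]

# Regime 2 (aggregate targeted): activity shifts into unmeasured substitutes
# by whatever amount keeps the measured aggregate near the target.
shifted = [y - TARGET + random.gauss(0.0, 0.5) for y in income]
money_targeted = [y - d for y, d in zip(income, shifted)]

print(round(correlation(money_passive, income), 2))   # strong, roughly 0.9
print(round(correlation(money_targeted, income), 2))  # near zero
```

A regularity estimated in the first regime would have looked like a reliable lever; in the second, the same indicator carries almost no information about income.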

Economists are, as the philosopher of economic science Daniel Hausman often points out, blessed with “plausible, powerful, and convenient” postulates but “cursed with the inability to learn much from experience,” mostly because of the ridiculously noisy data.

Economists have much worse and noisier data than seismologists. We can’t even remotely agree on what the thing we are predicting looks like or what shapes it will take; the forces behind it change dramatically over time, which is especially damaging given how few observations we have; and any regularity or statistical association we do find is bound to change through feedback, which makes it unusable for prediction.

Seismologists have better theory and much better data than economists. Seismologists can’t predict earthquakes. Economists can predict financial meltdowns? Please.