Professor Lockdown Now Claims to Have Saved 3.1 Million Lives

The problem of causal inference presents one of the great challenges of empirical analysis. While it is relatively easy to find patterns in data that appear to move over time in response to overlapping events, it is much harder to show that those events specifically caused the data to move as expected.

Think about how presidents often cite positive economic data, such as GDP growth or the stock market, as vindication of their own economic policies. Before early March 2020, this was a favorite tweeting topic of Donald Trump, although almost all of his predecessors made similar claims. While this argument makes for a useful campaign pitch, it is poor social science and would never survive empirical scrutiny.

To solve the causal inference problem, social scientists usually enlist sophisticated statistical tools designed to isolate the effect of a known event on a data trend or pattern by comparing it to an independent or unaffected counterfactual. With rigorous testing and good data, they may infer causality from natural experiments, usually by ruling out a series of alternative explanations.

To use a concrete example, consider the ongoing question about the effectiveness of the COVID-19 lockdown policies employed in several U.S. states as well as other countries. A causal inference test of the lockdowns would require clear evidence of different outcomes between states that adopted shelter-in-place rules and states that did not. Given the multitude of confounding variables affecting COVID-19 transmission and mortality rates, isolating the causal effect of the lockdown policies is no easy task.

That brings us to a new report published in the journal Nature by the epidemiology team at Imperial College London (ICL), including Neil Ferguson of some fame. This is the same epidemiology research center whose agent-based simulation model convinced the American and British governments to switch to a lockdown strategy.

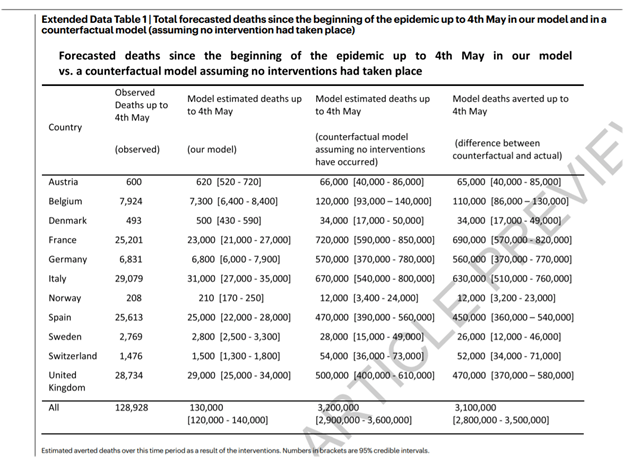

The study purports to demonstrate “that major non-pharmaceutical interventions and lockdown in particular have had a large effect on reducing transmission” of the COVID-19 virus. The paper and an accompanying press release from the university put numbers to this claim, asserting that the lockdowns saved an estimated 3.1 million lives in Europe.

Although this headline-grabbing claim will likely be treated as a vindication of the lockdown approach by its political supporters, a closer look at the analysis suggests the Imperial College team reached this conclusion without offering a viable causal inference strategy. As they describe in the paper, “By comparing the deaths predicted under the model with no interventions to the deaths predicted in our intervention model, we calculated the total deaths averted in our study period.”

Put differently, the epidemiologists reached their estimates by taking the difference between observed deaths and their own model's simulation of a world without COVID interventions, including the lockdowns. They then present that difference as if it demonstrated the validity of their own model, despite providing no evidence that their counterfactual simulation was correct.

Table 1 of the study applies this basic arithmetic exercise across 11 European countries.

The problem with this approach is that it implies causality by attributing the gap between projected and observed death tolls to the effectiveness of the lockdowns, and then supports that attribution with nothing more than a self-referential appeal to the team's own simulation model as a "no intervention" counterfactual.

If that line of argument sounds familiar, it's because Donald Trump beat the Imperial College team to the punch. Citing the now-infamous March 16th ICL report by Imperial's Neil Ferguson, the American president now regularly claims vindication for his own support of the lockdowns on account of the difference between that report's projected death toll of more than 2 million and the actual count of just over 100,000 as of this writing.

As Trump tweeted on May 26,

“For all of the political hacks out there, if I hadn’t done my job well, & early, we would have lost 1 1/2 to 2 Million People, as opposed to the 100,000 plus that looks like will be the number.”

Whether used by Imperial College or Trump, this line of argument falters as social science because it assumes the validity of the very forecast it purports to demonstrate. Rather than testing the causal inference that lockdowns reduced the COVID death rate, it takes the model's own forecast death rate as a given and then calculates the number of lives saved by simple subtraction.
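A minimal Python sketch can make the circularity concrete. It uses a simplified version of the subtraction, with the round figures from Trump's tweet (1.5 to 2 million projected, roughly 100,000 observed) as illustrative inputs; the function name `deaths_averted` and the third scenario, in which the assumed counterfactual equals the observed count, are hypothetical. With the observed toll held fixed, the "lives saved" figure is determined entirely by whichever counterfactual one chooses to assume.

```python
def deaths_averted(counterfactual_deaths: int, observed_deaths: int) -> int:
    """'Lives saved' as simple subtraction: assumed projection minus observed toll."""
    return counterfactual_deaths - observed_deaths


# Observed toll held fixed at roughly the figure cited in the tweet.
OBSERVED = 100_000

# Assumed "no intervention" projections: 2 million and 1.5 million come from the
# tweet's range; 100,000 is a hypothetical scenario where the assumption
# matches the observed count.
assumed_counterfactuals = [2_000_000, 1_500_000, 100_000]

for cf in assumed_counterfactuals:
    saved = deaths_averted(cf, OBSERVED)
    print(f"assumed counterfactual {cf:>9,} -> 'lives saved' {saved:>9,}")
```

The observed data never change across the three runs; only the assumption does, and the "lives saved" figure moves one-for-one with it. Nothing in the subtraction itself tests whether any of the assumed projections is correct.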

That approach only works if one begins from the assumption that Imperial College's "no intervention" model is axiomatically true. The new paper essentially acknowledges as much in noting that "the counterfactual model without interventions is illustrative only and reflects our model assumptions." Rather than investigating this seemingly crucial assertion further, let alone subjecting it to empirical testing, the authors indulge in a handwaving exercise that simply declares: "Given this agreement in differing scenarios we believe our estimates for the counterfactual deaths averted to be plausible."

This conclusion is rendered untenable by the many already-documented flaws in the original ICL model. That model failed to account for the effects of human behavioral modification on its results. It neglected to run robustness checks on its own assumptions about the effectiveness of lockdowns. It produced preposterously unrealistic death toll projections when applied to Sweden, which eschewed lockdowns for a lighter-touch policy. And there is even evidence that the ICL model design has no means of accounting for nursing home transmission of the disease, the largest single factor in COVID-related deaths.

These and other findings provide several reasons to doubt the main causal mechanism of the ICL model, which links lockdowns to large reductions in COVID fatalities.

The result is not a valid exercise in social scientific analysis, nor is it even an empirically robust test of the ICL model’s performance. Like Trump’s tweeting about both the economy before March 2020 and his own claimed role in reducing COVID deaths after the lockdowns, it is an exercise in statistical astrology.

Sadly, unlike Trump, the ICL team managed to convince Nature, a top journal in the profession, to publish these unfounded claims.